Today marked my final lecture of the semester, which meant I (lovingly) got to talk non-stop about Data Science for an hour. Or, to put it in an academic manner, I summarised all the concepts covered, in the name of revision.

While coming home, I thought of jotting down everything I had talked about in one go, with the added challenge of making it interesting even for beginners. So, brace yourself for a one-flow Data Science summary in a (tad) simplified manner.

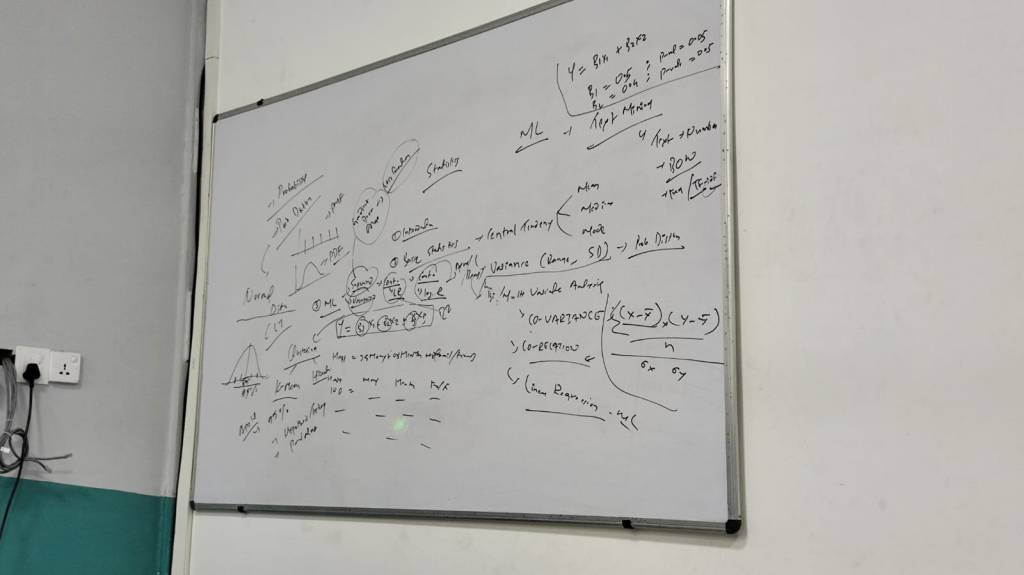

Let’s kick off with the basics, that is, data: a collection of information. Imagine we’re in a room, and the data we have is each person’s wealth. To simplify this abundance of information, we turn to measures of central tendency, starting with the mean, which, let’s say, comes out to be 100K. That’s one easy picture for our brain to remember.

But what if Elon Musk joins the room? Now the mean is still computed correctly, yet it gives us a misleading picture of the typical person in the room! Enter median and mode, saving us from skewed perspectives and outlier shenanigans.
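Here is a minimal sketch of that room in Python. The wealth figures (and the size of the outlier) are made-up numbers chosen only so the mean starts at 100K; they are not real data.

```python
from statistics import mean, median

# Hypothetical wealth of five people in the room (made-up numbers).
room = [60_000, 80_000, 100_000, 120_000, 140_000]

# A hypothetical, deliberately enormous outlier walks in.
room_with_outlier = room + [200_000_000_000]

mean_before = mean(room)
median_before = median(room)
mean_after = mean(room_with_outlier)
median_after = median(room_with_outlier)

print("before:", mean_before, median_before)
print("after: ", mean_after, median_after)
```

The mean jumps into the billions, while the median only nudges from 100K to 110K, which is why the median is the safer one-point summary when outliers are around.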

Alternatively, we could shift from a one-point summary to a two-point one. Enter Variance (or its square root counterpart, Standard Deviation), a measure of how varied or spread out our data is. Recall the tale of the 6-foot man who drowned while crossing a river that was 3 feet deep on average? Well, with this two-point summary, at least we’re more likely to survive.
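The river tale can be sketched in a few lines. The depth readings below are hypothetical, picked so that the average is exactly 3 feet; `pstdev` is the population standard deviation from Python's standard library.

```python
from statistics import mean, pstdev

# Hypothetical depth readings (in feet) taken while crossing the river.
depths = [1, 1, 2, 2, 9]

average_depth = mean(depths)   # the one-point summary
spread = pstdev(depths)        # the second point: standard deviation
deepest = max(depths)

print("average depth:", average_depth)
print("standard deviation:", round(spread, 2))
print("deepest point:", deepest)
```

An average of 3 feet with a spread of about 3 feet is a warning sign: somewhere the river is far deeper than the average suggests.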

Life, however, isn’t just about one variable; often we’re interested in relationships (okay, not the romantic type here). We mean relationships between variables, like, in our case, the relationship of individual wealth with, let’s say, age. One easy way to track this is to measure how one variable varies with respect to another, which is to measure covariance.

But beware of covariance: it is scale (unit) dependent. The covariance of wealth with age comes out different depending on whether wealth is measured in rupees or dollars, which I know is super weird. But there’s a trick to get around it. The bigger the scale, the bigger the covariance and, luckily for us, the bigger the standard deviation. So, one simple way of making the measure scale invariant is to divide the covariance by the standard deviations of the two variables. Cue correlation: the most used, and at times abused, metric in all of science.
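To see the unit problem in action, here is a small sketch. The ages, the wealth figures, and the exchange rate of 80 rupees per dollar are all assumptions for illustration; the two helper functions are written by hand using the population formulas.

```python
from statistics import mean, pstdev

def covariance(xs, ys):
    """Population covariance: average product of deviations from the means."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)

def correlation(xs, ys):
    """Covariance divided by both standard deviations (unit free)."""
    return covariance(xs, ys) / (pstdev(xs) * pstdev(ys))

# Hypothetical data: ages and wealth in dollars.
ages = [25, 35, 45, 55, 65]
wealth_usd = [20_000, 50_000, 90_000, 100_000, 140_000]

RUPEES_PER_DOLLAR = 80  # assumed exchange rate, for illustration only
wealth_inr = [w * RUPEES_PER_DOLLAR for w in wealth_usd]

print("covariance (USD):", covariance(ages, wealth_usd))
print("covariance (INR):", covariance(ages, wealth_inr))
print("correlation (USD):", correlation(ages, wealth_usd))
print("correlation (INR):", correlation(ages, wealth_inr))
```

The covariance in rupees is 80 times the covariance in dollars, while the correlation stays the same in both currencies.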

Okay, I don’t want to say much about the “Correlation isn’t equal to Causation” cliché here, so that’s for you to look up. (Please google Randomized Controlled Trial!)

Up to this point, all is smooth and straightforward. But our curiosity about the world order and relationships doesn’t end there. We desire more insights, such as what happens to wealth if age increases by one unit. This entails calculating a gradient, a sophisticated term for the slope we learned in high school (or dy/dx for those inclined towards calculus). The key difference here is that not all points align on a single line; hence, we need to “learn” the best line, the one that is, overall, closest to all the points.
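One common way to pick that "best" line is ordinary least squares, where the slope is the covariance of the two variables divided by the variance of the input. The sketch below assumes that choice (the lecture may well have used another) and reuses made-up age and wealth numbers.

```python
from statistics import mean

def fit_line(xs, ys):
    """Least-squares line y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    intercept = my - slope * mx
    return slope, intercept

# Hypothetical data: the points do not sit on one line.
ages = [25, 35, 45, 55, 65]
wealth = [20_000, 50_000, 90_000, 100_000, 140_000]

slope, intercept = fit_line(ages, wealth)
print("extra wealth per extra year of age:", slope)
print("intercept:", intercept)
```

For these numbers the slope works out to 2,900, read as "one more year of age goes with about 2,900 more in wealth" in this toy dataset.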

Until now, we’ve been navigating the Universe of Statistics. However, having encountered the learning aspect, it’s time to delve into the Universe of Machine Learning.

Before we dive into Machine Learning, let’s discuss the Model. Not only because people often confuse the two but also because it serves as a stepping stone into the realm of Machine Learning.

A Model is a conceptual representation of some real-world phenomenon, often approximated. Take Happiness as an example. If we wish to model Happiness, a simple approach is to model it as a combination of Money, Health, and Family & Friends. Imagine a top-notch psychologist (obviously some YouTuber) formulating this combination: Happiness = 0.5 * Money + 0.3 * Health + 0.2 * Family & Friends. (Let’s not judge the poor soul!) So, that’s an example of a model, and the weights assigned to the variables are parameters — the ultimate answers we seek in life.
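Written as code, the psychologist's model is just a weighted sum. The weights come from the formula above; the 0 to 10 scores for the example person are made up.

```python
# Parameters of the model: the weights from the formula above.
WEIGHTS = {"money": 0.5, "health": 0.3, "family_and_friends": 0.2}

def happiness(scores):
    """Weighted combination of the three variables."""
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)

# Hypothetical person, each variable scored from 0 to 10.
person = {"money": 6, "health": 8, "family_and_friends": 9}

print("happiness score:", round(happiness(person), 2))
```

Change the weights and the same person gets a different score, which is exactly why the parameters matter so much.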

But why settle for just one person’s opinion? Enter Machine Learning, where we collect data from countless individuals to allow the machine to learn the best weights through some special magic. The magic here — as always facilitated by mathematics — aims to find parameters that yield the least overall error (loss).

Similar to a fruit seller adjusting weights, our machine iterates through feedback loops to reach optimal parameters. Although it lacks the visual simplicity of a fruit seller’s pan balance, it exploits one cool mathematical property: the slope at a minimum or maximum is zero. Despite the nuanced technicalities, the bigger picture remains the same: the machine keeps adjusting the parameters in a feedback loop until the error stops shrinking.
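One popular version of this feedback loop is gradient descent: measure the slope of the error with respect to each weight, then nudge the weights downhill until the slope is (nearly) zero. The sketch below assumes that algorithm and a mean squared error loss. The data is synthetic, generated from the psychologist's weights so we can check whether the machine recovers them; the learning rate and number of steps are arbitrary choices.

```python
import random

TRUE_WEIGHTS = [0.5, 0.3, 0.2]  # used only to generate synthetic data

rng = random.Random(0)  # fixed seed so the run is repeatable
# 200 hypothetical people, each with Money, Health, Family scores in [0, 1].
people = [[rng.random() for _ in range(3)] for _ in range(200)]
scores = [sum(w * x for w, x in zip(TRUE_WEIGHTS, p)) for p in people]

def predict(weights, person):
    return sum(w * x for w, x in zip(weights, person))

def mean_squared_error(weights):
    """The loss: average squared gap between prediction and truth."""
    return sum((predict(weights, p) - s) ** 2
               for p, s in zip(people, scores)) / len(people)

def gradient(weights):
    """Slope of the loss with respect to each weight."""
    grad = [0.0, 0.0, 0.0]
    for p, s in zip(people, scores):
        error = predict(weights, p) - s
        for j in range(3):
            grad[j] += 2 * error * p[j] / len(people)
    return grad

weights = [0.0, 0.0, 0.0]  # start from a clueless guess
LEARNING_RATE = 0.5        # assumed step size
for _ in range(2000):      # the feedback loop
    grad = gradient(weights)
    weights = [w - LEARNING_RATE * g for w, g in zip(weights, grad)]

print("learned weights:", [round(w, 3) for w in weights])
print("final loss:", mean_squared_error(weights))
```

Because the synthetic data has no noise, the learned weights land back on 0.5, 0.3 and 0.2; with real, messy data they would only get close.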

If the outcome is continuous (like the Happiness score in our case), it’s a regression problem; if categorical (like Person A: Happy, Person B: Unhappy), it’s classification. As our learning is guided (supervised) by the output and error in a feedback manner, we call these types of algorithms Supervised Learning algorithms. (A simple mnemonic: Supervised Learning involves both Input and Output data.)

But what if our data lacks output? Enter Unsupervised Learning, where we cluster similar data points together. While terms like Unsupervised and Clustering may sound sophisticated, trust me, it’s easy peasy, often requiring nothing more than high school distance calculation.
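To show how far plain distance calculation gets us, here is a bare-bones sketch of k-means, one well-known clustering algorithm (my choice for illustration). The points and the starting centroids are made up, and the code assumes no cluster ever ends up empty, which holds for this toy data.

```python
from math import dist  # Euclidean distance, straight from high school

# Hypothetical 2D points forming two obvious groups.
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5),
          (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]

def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [min(range(len(centroids)), key=lambda k: dist(p, centroids[k]))
            for p in points]

def update(points, labels, k):
    """Move each centroid to the average of its assigned points."""
    new_centroids = []
    for cluster in range(k):
        members = [p for p, label in zip(points, labels) if label == cluster]
        new_centroids.append(
            tuple(sum(coord) / len(members) for coord in zip(*members)))
    return new_centroids

# Assumed starting guess: the first and last points.
centroids = [points[0], points[-1]]
for _ in range(10):
    labels = assign(points, centroids)
    centroids = update(points, labels, len(centroids))

print("labels:", labels)
print("centroids:", centroids)
```

No output column was needed: the groups emerge purely from which points sit close to each other.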

Before we end with this Happiness example, let me add two important concepts. How did we come up with those 3 variables? Well, in this case, I chose them randomly, or let’s say using some “domain knowledge”. But in the real world, when we have lots of variables to choose from, we can apply several techniques to pick the useful ones, a process called Feature Selection. (Variables are often termed features in the Machine Learning Universe.) And at times, we can even engineer new variables/features from the existing features, which we call Feature Engineering.
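As one simple illustration of Feature Selection, we can rank candidate features by how strongly each correlates with the outcome and keep the top ones. This is only one of many techniques, and all the numbers below (including the deliberately silly `shoe_size` feature) are made up.

```python
from statistics import mean, pstdev

def correlation(xs, ys):
    """Population correlation between two equally long lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)
    return cov / (pstdev(xs) * pstdev(ys))

# Hypothetical happiness scores for five people.
happiness = [3, 5, 6, 8, 9]

# Hypothetical candidate features for the same five people.
features = {
    "money": [2, 4, 6, 7, 9],
    "health": [4, 5, 5, 8, 9],
    "shoe_size": [9, 7, 8, 7, 9],
}

# Rank features by the strength (absolute value) of their correlation.
ranked = sorted(features,
                key=lambda name: abs(correlation(features[name], happiness)),
                reverse=True)

for name in ranked:
    print(name, round(correlation(features[name], happiness), 2))
```

In this toy data, money and health rank at the top and shoe size lands last, so it would be the first feature to drop.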

Okay, we’ve almost hit the bell! So, I need to go más rápido in these last few minutes and talk about the remaining bits.

First, let’s talk about text data. How do we use the Statistical and Machine Learning techniques there? Remember the saying about the man with a hammer seeing every problem as a nail? Well, our machine also treats everything as numbers. The trick is to transform text data into numbers, using traditional techniques like Bag of Words or modern ones like Embeddings. This principle applies to images and videos too, as everything boils down to transformation into numbers.
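Bag of Words is simple enough to sketch by hand: build a vocabulary, then count how often each word appears in each sentence. The two sentences are made up, and splitting on whitespace is a simplifying assumption (real text needs proper tokenisation).

```python
from collections import Counter

# Hypothetical mini corpus.
sentences = [
    "data science is fun",
    "machine learning is data driven fun",
]

# The vocabulary: every distinct word, in alphabetical order.
vocabulary = sorted({word for s in sentences for word in s.split()})

def bag_of_words(sentence):
    """Turn a sentence into a list of word counts, one per vocabulary word."""
    counts = Counter(sentence.split())
    return [counts[word] for word in vocabulary]

vectors = [bag_of_words(s) for s in sentences]

print(vocabulary)
for vector in vectors:
    print(vector)
```

Each sentence is now a row of numbers, which means every technique discussed so far (means, correlations, learned weights) can be applied to text.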

Now on to Neural Networks. Even with cool new architectures coming up left, right, and centre, the core principle remains the same: finding the parameters that best fit the data. And then there are plenty of topics that we have skipped in the Universe of Statistics, like the important concept of probability (which pops up every time we’re dealing with uncertainty). Or the world of distributions, some normal and some not so normal!

To close with some lasting thoughts, let’s reflect on how we learn in life. Most of the time, we learn from examples around us, with the feedback loop (pain/pleasure) helping us in the process. As you might have noticed, Machine Learning follows a similar path. Just as life is a continuous process and the best way to enjoy it is to stay curious and learn, maybe we should adopt the same approach for learning Machine Learning and Data Science. One story at a time.
